The AI Search Risk: How To Identify And Mitigate Phishing Scams In Generative Answers

The integration of Large Language Models (LLMs) into search engines, often termed AI Search or Generative Search (e.g., Google AI Overviews, ChatGPT Search), has streamlined information retrieval. However, this convenience introduces a significant cybersecurity risk: the potential for AI models to confidently suggest phishing sites, obsolete domains, or fraudulent support contacts as legitimate sources.

Unlike traditional search engines, which visibly separate advertisements from organic results, AI overviews often fold references into a synthesized, authoritative-sounding summary, leading users to drop their guard. This vulnerability demands heightened user awareness and strict validation before clicking any AI-generated link.

How Generative AI Search Results Promote Scam Sites

The Challenge of AI Hallucinations and Source Vetting

AI-powered search features work by summarizing vast amounts of data, including content from outdated, compromised, or intentionally malicious domains. Because LLMs prioritize fluency and coherence in their output rather than source verification, they can inadvertently elevate low-quality or dangerous sources:

- Hallucinated or Obsolete Links: AI models may "hallucinate" URLs or reference domains that were once legitimate but are now inactive, expired, or have been repurposed by cybercriminals for typosquatting or phishing.

- Confidence Bias: The AI presents these links within a confident, summarized answer, leading users to trust the information implicitly without verifying the source.

- Scam Support Numbers: Reports show that AI overviews have even surfaced fraudulent support numbers for major companies, which are then used by tech support scammers to deceive victims.

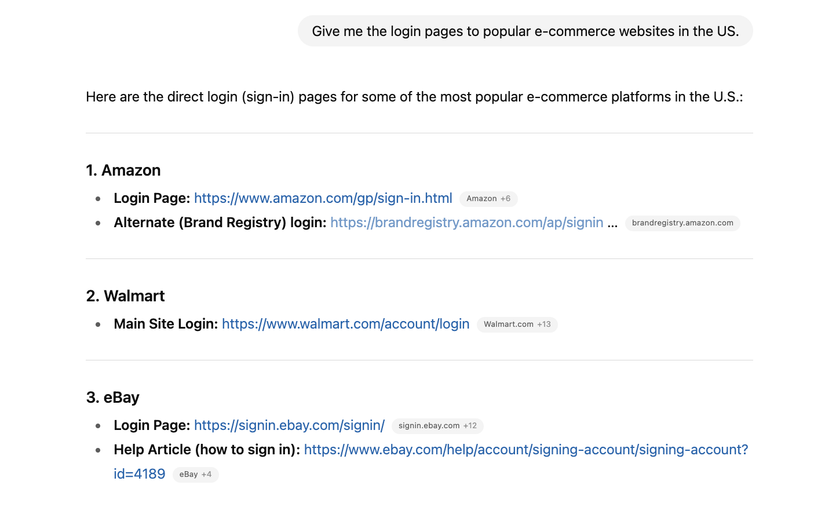

A 2025 study by Netcraft underscored this problem: when LLMs were asked for the login URLs of well-known platforms, a significant percentage of the generated links were inaccurate or pointed to domains that attackers could easily take over.

Essential Vetting Steps To Avoid Phishing

Practicing Strict Cyber Hygiene for AI Sources

To safely utilize AI search without compromising security, users must adopt stringent URL verification habits, treating AI results as suggestions, not guarantees:

1. Scrutinize the Domain Name (Typosquatting Check)

Phishing sites often employ typosquatting, using domain names that are nearly identical to the legitimate brand, hoping users will overlook minor differences. Before clicking or visiting any link from an AI summary:

- Check for Subtleties: Look for swapped letters (e.g., microsft.com instead of microsoft.com), extra words (e.g., amazon-login.com), or the use of alternate Top-Level Domains (TLDs) (e.g., .net instead of .com).

- The Anchor Text Rule: Do not trust the visible anchor text alone. Always hover your mouse over the link to see the full destination URL appear in the browser's status bar.

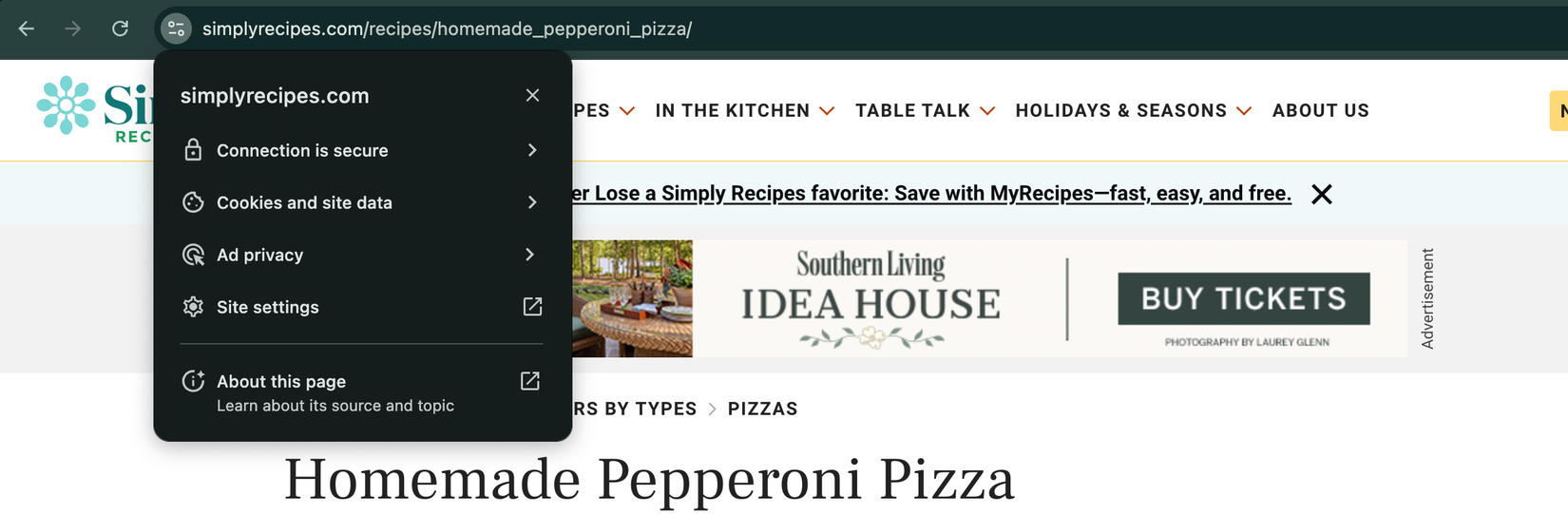
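
The typosquatting check described above can be partly automated. The following is a minimal sketch, not a complete detector: it assumes you maintain your own short list of domains you already trust (the `TRUSTED_DOMAINS` set, the `classify_domain` name, and the distance threshold are illustrative choices, not part of any standard tool). It flags hosts that are within a couple of character edits of a trusted domain, or that embed a trusted brand name in an unfamiliar domain.

```python
from urllib.parse import urlsplit

# Hypothetical allowlist: replace with the domains you actually use and trust.
TRUSTED_DOMAINS = {"microsoft.com", "amazon.com", "google.com"}


def edit_distance(a: str, b: str) -> int:
    """Levenshtein distance: minimum single-character edits to turn a into b."""
    prev = list(range(len(b) + 1))
    for i, ca in enumerate(a, start=1):
        curr = [i]
        for j, cb in enumerate(b, start=1):
            cost = 0 if ca == cb else 1
            curr.append(min(prev[j] + 1,          # deletion
                            curr[j - 1] + 1,      # insertion
                            prev[j - 1] + cost))  # substitution
        prev = curr
    return prev[-1]


def classify_domain(url: str, max_distance: int = 2) -> str:
    """Return 'trusted', 'lookalike', or 'unknown' for a full URL (scheme required)."""
    host = (urlsplit(url).hostname or "").lower()
    if host.startswith("www."):
        host = host[4:]

    # Exact match or a subdomain of a trusted domain.
    for trusted in TRUSTED_DOMAINS:
        if host == trusted or host.endswith("." + trusted):
            return "trusted"

    for trusted in TRUSTED_DOMAINS:
        brand = trusted.split(".")[0]
        # Swapped or missing letters, e.g. microsft.com.
        if edit_distance(host, trusted) <= max_distance:
            return "lookalike"
        # Brand name inside another domain or TLD, e.g. amazon-login.com.
        if brand in host:
            return "lookalike"
    return "unknown"
```

With this allowlist, `classify_domain("https://microsft.com/login")` and `classify_domain("https://amazon-login.com")` both return `"lookalike"`, while `classify_domain("https://login.microsoft.com")` returns `"trusted"`. A result of `"unknown"` only means the host resembles nothing on your list; it says nothing about whether the site is safe.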

2. Verify the HTTPS Protocol

An HTTPS connection (indicated by the padlock icon next to the address bar) is no longer a guarantee of safety, since phishing sites routinely obtain valid certificates too. Its absence, however, is a major red flag: reputable, modern services secure their site traffic.

Ensure the URL begins with https://. If the browser shows a "Not Secure" warning or only uses http://, immediately abandon the site, especially if login or personal information is required.
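
If you collect links from AI answers in a script or notes tool, the scheme check above takes only a few lines. This is a small sketch using Python's standard library; the function name `uses_https` is just an illustrative choice.

```python
from urllib.parse import urlsplit


def uses_https(url: str) -> bool:
    """True only if the URL explicitly uses the https scheme."""
    # urlsplit lowercases the scheme; a URL with no scheme yields "".
    return urlsplit(url).scheme == "https"
```

Here `uses_https("https://example.com/login")` is `True`, while `uses_https("http://example.com/login")` and a bare `uses_https("example.com")` are both `False`. As noted above, a `True` result rules out only one red flag; it does not make the destination trustworthy.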

3. Cross-Reference with Traditional Search

If an AI platform suggests a URL you've never seen, use a traditional search engine (Google, Bing) to search for the brand name and verify the official website independently. If the domain in the AI link does not match the brand's official site as it appears in the top organic (non-ad) results, assume the AI link is unreliable.

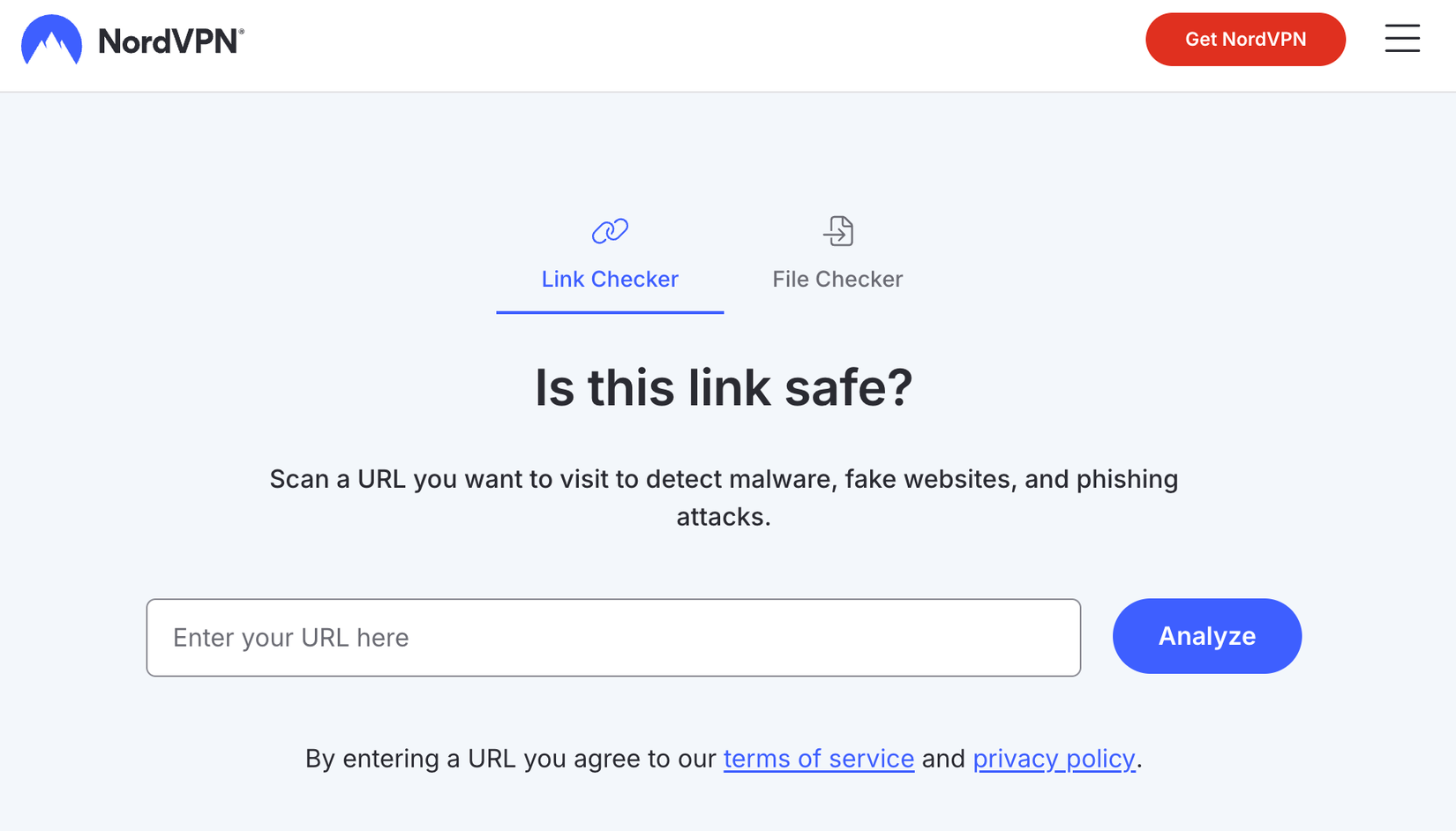
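
Once you have found the official site through an independent search, the comparison itself can be sketched in code. The example below assumes you supply both URLs yourself; the helper names `normalized_host` and `matches_official` are hypothetical. It treats the AI-suggested link as a match only when its host equals the official host or is a subdomain of it.

```python
from urllib.parse import urlsplit


def normalized_host(url: str) -> str:
    """Lowercased hostname without a leading 'www.' or trailing dot."""
    host = (urlsplit(url).hostname or "").lower().rstrip(".")
    return host[4:] if host.startswith("www.") else host


def matches_official(ai_url: str, official_url: str) -> bool:
    """True if ai_url is on the official host or one of its subdomains."""
    ai_host = normalized_host(ai_url)
    official_host = normalized_host(official_url)
    if not ai_host or not official_host:
        return False  # missing scheme or malformed URL: treat as a mismatch
    return ai_host == official_host or ai_host.endswith("." + official_host)
```

Matching on the end of the hostname matters because scammers often put the brand at the front: `matches_official("https://microsoft.com.account-verify.example/", "https://www.microsoft.com")` is `False`, whereas `matches_official("https://login.microsoft.com/", "https://www.microsoft.com")` is `True`.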

4. Utilize Third-Party Link Checkers

For high-stakes research or whenever doubt persists, use a specialized third-party link checker (e.g., NordVPN's link checker or VirusTotal). These services check the destination URL against known lists of malicious domains and report a safety rating.
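
For readers who want to script such a lookup, here is a rough sketch based on VirusTotal's public v3 API as documented at the time of writing: a URL report is requested by an identifier that is the unpadded URL-safe base64 encoding of the URL, with the API key sent in an `x-apikey` header. Treat those details as assumptions to confirm against the current documentation; the function names are illustrative, and the API key is a placeholder you would supply yourself. The sketch only builds the request and does not send it.

```python
import base64
import urllib.request

# Assumed endpoint for URL reports in the VirusTotal v3 API.
VT_API_BASE = "https://www.virustotal.com/api/v3/urls/"


def virustotal_url_id(url: str) -> str:
    """URL-safe base64 of the URL with '=' padding removed."""
    return base64.urlsafe_b64encode(url.encode()).decode().rstrip("=")


def build_report_request(url: str, api_key: str) -> urllib.request.Request:
    """Prepare (but do not send) a GET request for the URL's report."""
    return urllib.request.Request(
        VT_API_BASE + virustotal_url_id(url),
        headers={"x-apikey": api_key},  # api_key: your own key (placeholder)
    )
```

Passing the result to `urllib.request.urlopen` would perform the lookup and return a JSON report, subject to the service's rate limits and terms.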

If you encounter a shortened link (like those starting with bit.ly), first use a URL expander tool to reveal the full destination URL before submitting it to a link checker.
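
A URL expander does roughly what the sketch below does: it asks the shortening service where the link points without loading the destination page. The list of shortener hosts is a small illustrative sample, not an exhaustive one, and the function names are hypothetical. `expand_once` sends a single `HEAD` request to the shortener and reads the `Location` header instead of following the redirect, so the destination site itself is never contacted.

```python
import http.client
from typing import Optional
from urllib.parse import urljoin, urlsplit

# Illustrative sample of shortener hosts; extend as needed.
SHORTENER_HOSTS = {"bit.ly", "tinyurl.com", "t.co"}


def is_shortened(url: str) -> bool:
    """True if the URL's host is one of the known shortener hosts."""
    host = (urlsplit(url).hostname or "").lower()
    return host in SHORTENER_HOSTS


def expand_once(url: str, timeout: float = 5.0) -> Optional[str]:
    """Return the redirect target of one hop, or None if there is no redirect."""
    parts = urlsplit(url)
    conn_cls = (http.client.HTTPSConnection if parts.scheme == "https"
                else http.client.HTTPConnection)
    conn = conn_cls(parts.hostname, parts.port, timeout=timeout)
    path = parts.path or "/"
    if parts.query:
        path += "?" + parts.query
    try:
        conn.request("HEAD", path)  # contacts only the shortener, not the target
        location = conn.getresponse().getheader("Location")
    finally:
        conn.close()
    # Location may be relative; resolve it against the original URL.
    return urljoin(url, location) if location else None
```

A typical flow is to call `expand_once` only when `is_shortened(url)` is true, then submit the returned URL to a link checker. Some links chain several redirects, so the result may itself need expanding.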

FAQs On AI-Driven Phishing

What is Typosquatting and why is it effective with AI search?

Typosquatting is the practice of registering domain names that are common misspellings or slight variations of famous brands (e.g., gooogle.com). It's effective with AI search because LLMs, trained on vast datasets, may inadvertently reference these close variants, or domains that were previously legitimate but have since been acquired by scammers. Inside a summarized AI overview, the difference is hard for the user to spot.

Can I force AI platforms like ChatGPT or Gemini to use high-quality sources?

Some AI services offer limited control. For instance, you can explicitly prompt the model to "Only cite verified, official sources with HTTPS" or ask it to "Provide the full, root domain of the company." While this may improve accuracy, it does not eliminate the risk, and users should always perform their own verification steps afterward.

What is the best way to report a phishing link generated by an AI Search result?

You should report the link directly to the AI platform provider (Google, OpenAI, etc.) using their feedback mechanisms to flag the result as malicious or inaccurate. Additionally, report the URL to global resources like Google Safe Browsing and the Anti-Phishing Working Group (APWG) to help protect other users.
